So the following is purely for fun, in response to @mark's post imagining how this would be done. I did follow up on this over the weekend and got something "working".

I heavily modified the project I mentioned earlier by manually rolling it over to the TensorFlow Lite C API (c_api), which was a real pain, then porting it to Windows and feeding it the deeplabv3_257_mv_gpu.tflite model. To make it useful to Isadora, I dusted off and updated an OpenCV-to-Spout pipeline in C++ that I used a few years ago for some of my live projection masking programs. My prototype can now receive an Isadora stage, run it through the model, and output the resulting mask to Spout again for Isadora to use with the Alpha Mask actor.
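For anyone curious what the "run it through the model and output the mask" step boils down to, here is a minimal sketch of the post-processing only. It is not the prototype's actual code. It assumes the model's output is a flat buffer of per-pixel class scores laid out as height x width x classes (257 x 257 x 21 for this model, as far as I know), and that the "person" class is index 15, as in the PASCAL VOC label list. The function name `personMaskFromScores` and its parameters are made up for illustration.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: turns per-pixel class scores into an 8-bit mask.
// Assumption: scores are laid out as height x width x numClasses floats.
// Returns 255 where personClass has the highest score, 0 elsewhere.
// Returns an empty vector if the buffer size does not match the dimensions.
std::vector<std::uint8_t> personMaskFromScores(const std::vector<float>& scores,
                                               int width, int height,
                                               int numClasses, int personClass) {
    if (width <= 0 || height <= 0 || numClasses <= 0) {
        return {};
    }
    const std::size_t pixelCount =
        static_cast<std::size_t>(width) * static_cast<std::size_t>(height);
    if (scores.size() != pixelCount * static_cast<std::size_t>(numClasses)) {
        return {};
    }

    std::vector<std::uint8_t> mask(pixelCount, 0);
    for (std::size_t px = 0; px < pixelCount; ++px) {
        // Scores for this pixel are contiguous: one float per class.
        const float* s = scores.data() + px * static_cast<std::size_t>(numClasses);

        // Argmax over the classes.
        int best = 0;
        for (int c = 1; c < numClasses; ++c) {
            if (s[c] > s[best]) {
                best = c;
            }
        }
        mask[px] = (best == personClass) ? 255 : 0;
    }
    return mask;
}
```

With the assumed values this would be called as `personMaskFromScores(scores, 257, 257, 21, 15)`. The resulting buffer can be wrapped in a single-channel `cv::Mat` (`CV_8UC1`) and scaled back up to the stage resolution with `cv::resize` before it is sent out over Spout.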

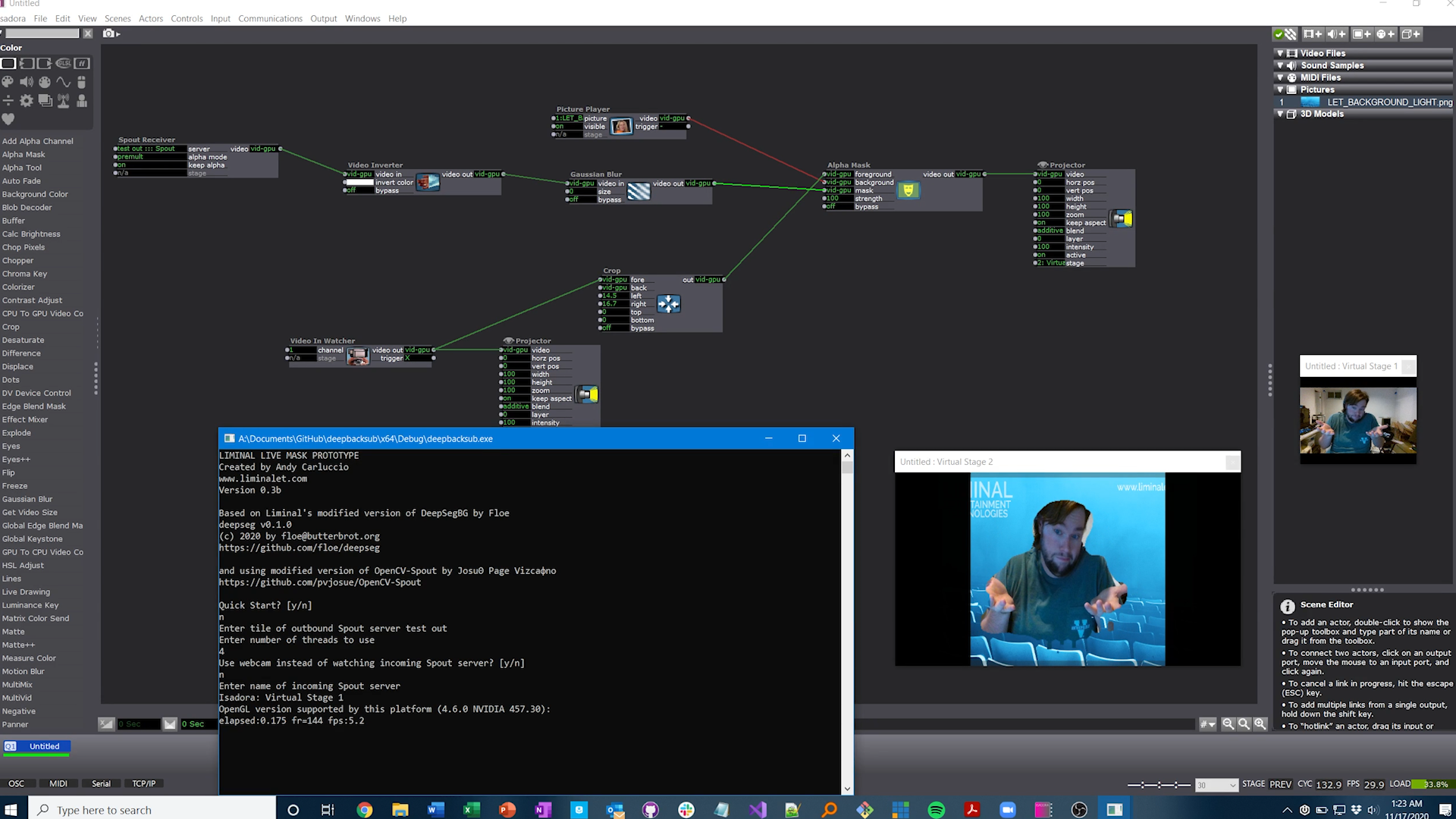

My results:

Now obviously, it would be insane to attempt this for production purposes in its current form. I'm getting about 5 fps (granted, with no GPU acceleration and running in debug mode). I could slightly improve things by bouncing the original Isadora stage back on its own Spout server, but this is just a proof of concept. From this state, it should be relatively easy to port to Mac/Syphon and to add GPU acceleration on compatible systems for a higher frame rate and/or multiple instances for many performers.

Again, just a fun weekend project, but I found it very educational.