OpenNI Tracker tutorial suggestions

-

Hi @mark,

Congratulations on the work you have done to bring out the Isadora OpenNI Tracker module. The tutorial resource is a great companion, with excellent templates for skeleton tracking. Thank you for inviting comments and suggestions for additional tutorial segments. The forum thread you used to invite comments does not appear to allow replies, so I am commenting here.

One thing I find remarkable about depth cameras is their ability to calibrate depth. It would be of great benefit to see a demonstration of some of the ways that points in physical space can be defined as zones for triggering or modifying. These points have a position in depth (z), but could be anywhere horizontally and vertically. How do you establish a three-dimensional network of hotspot points from the depth image? A participant moving through and around the tracking space might then register a position within Isadora. This position and volumetric data might then be used to modify spatial effects, such as lighting, or to activate an object based on participant proximity.
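As a rough illustration of the "proximity" idea, here is a minimal sketch in plain Python (not Isadora; inside Isadora this logic would be built from actors). It assumes the tracker gives a participant position as (x, y, z) in metres and that a hotspot is a single point with a radius; the function name, coordinates and 0 to 100 scale are all hypothetical choices for the example.

```python
import math

def proximity_level(participant, hotspot, radius):
    """Return 100.0 at the hotspot centre, falling linearly to 0.0 at `radius`.

    participant, hotspot: (x, y, z) positions, assumed to be in metres.
    The 0 to 100 output is meant to resemble an intensity value for lighting.
    """
    distance = math.dist(participant, hotspot)  # straight-line 3D distance
    if distance >= radius:
        return 0.0
    return 100.0 * (1.0 - distance / radius)

# Hypothetical hotspot 2 m in front of the camera, with a 1 m radius.
hotspot = (0.0, 0.0, 2.0)
print(proximity_level((0.0, 0.0, 2.5), hotspot, 1.0))  # 50.0
```

A network of hotspots would simply be a list of such points, each evaluated against the participant position every frame.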

I am sure there are any number of fantastic tutorial possibilities concerning the capabilities of the OpenNI Tracker that will interest many users. The tutorial I have suggested covers a familiar use of depth cameras in an installation or participatory setup.

Best Wishes

Russell

-

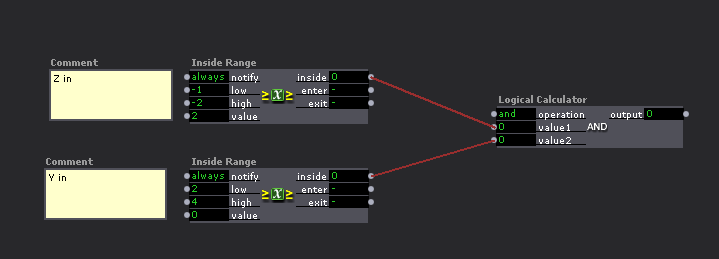

Hi. I think there might be a way to do this with the Inside Range actor and the Logical Calculator actor. I need some time to work on this, though.

In theory, you can test whether Z is inside a range. Say the zone (imagine a box) spans -1 to -2 on Z, while the full range is 10 to -10. If a person is inside this range, the Inside Range actor outputs 'true', which is 1.

Then do the same for Y (horizontal): if the box/zone spans 2 to 4 and the full range is 10 to -10, a person inside this range again makes the Inside Range actor output 'true' (1).

When the Logical Calculator receives a 1 on both inputs (a logical AND), it outputs a 1, which can then be used to trigger anything you want.
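The same per-axis test combined with an AND can be modelled in a few lines of Python. This is only a sketch of the logic, not Isadora's actors: `inside_range` and `logical_and` are hypothetical stand-ins, the range limits are the example numbers from the post, and it assumes the limits are inclusive (the real actor's edge behaviour may differ).

```python
def inside_range(value, low, high):
    """Stand-in for an inside-range test: 1 if value lies between the limits, else 0.

    The limits may be given in either order (e.g. -1 to -2); both ends are
    treated as inside, which is an assumption.
    """
    lo, hi = min(low, high), max(low, high)
    return 1 if lo <= value <= hi else 0

def logical_and(a, b):
    """Stand-in for a logical AND: 1 only when both inputs are 1."""
    return 1 if a and b else 0

def in_zone(y, z):
    z_ok = inside_range(z, -1, -2)  # zone spans -1 to -2 on Z
    y_ok = inside_range(y, 2, 4)    # zone spans 2 to 4 on Y
    return logical_and(y_ok, z_ok)

print(in_zone(3.0, -1.5))  # 1: inside both ranges
print(in_zone(3.0, 0.5))   # 0: Z is outside the zone
```

Each additional box/zone would be another pair of range tests feeding its own AND.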

-

@skulpture said:

do this with the inside range actor and the logical calculator actor

Hi Graham,

That looks really interesting and conceptual; I will have to try it.

I was thinking in terms of the OpenNI Tracker and what might be possible with the 'depth min cm' and 'depth max cm'.

There is an indication that limiting the depth range into bands, or slices, through 3D space would allow a series of hotspots to be active simultaneously. A participant would be detected and represented in the depth image within a band/slice at a defined z distance, with the depth properties calibrated for that slice and its distance from the camera. What is not clear is how fast the OpenNI Tracker would allow the band/slice position to cycle, shifting these properties quickly enough to simulate simultaneous slices and hotspots effectively.

Thinking about it now, it appears a bit complex and fiddly. Might there be other ways to approach it?
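To make the band/slice idea concrete, here is a minimal Python model of what a depth min/max band does to one depth frame: count the pixels whose reading falls inside the band and call the band "occupied" if there are enough of them. It is an assumption-laden sketch, not how the OpenNI Tracker actor works internally; the frame values, band limits and `min_pixels` threshold are made up for the example. Testing several bands against the same frame is one way to picture "simultaneous slices" without cycling a single band.

```python
def band_occupancy(depth_frame, bands, min_pixels=3):
    """For each (min_cm, max_cm) band, report whether enough pixels fall inside it.

    depth_frame: rows of depth readings in cm, where 0 means "no reading".
    bands: list of (min_cm, max_cm) pairs; min is inclusive, max exclusive.
    """
    result = []
    for min_cm, max_cm in bands:
        count = sum(
            1
            for row in depth_frame
            for d in row
            if d > 0 and min_cm <= d < max_cm
        )
        result.append(count >= min_pixels)
    return result

# Tiny made-up frame: one person around 150 cm, another around 400 cm.
frame = [
    [0, 150, 155, 400],
    [0, 152, 158, 410],
    [0, 0, 405, 400],
]
bands = [(100, 200), (200, 300), (300, 450)]
print(band_occupancy(frame, bands))  # [True, False, True]
```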

Best Wishes

Russell

-

@bonemap said:

I was thinking in terms of the OpenNI Tracker and what might be possible with the 'depth min cm' and 'depth max cm'.

That could work. I see these as settings rather than a means of dynamic interactive control. My workflow would be to set these up to define the 'active area' of the space, and then use the outputs from the actor to calculate the tracking.

I can see your thinking, and it may very well work, but I'm not sure that's how I would do it.

-

@skulpture said:

use the outputs from the actor to calculate the tracking

Hi Graham,

I think you are probably right. The skeleton tracking provides a z value (depth) for a participant while tracking, and this could easily be translated into a grid of zones.

I was really curious about the depth slices; I love the way you can calibrate the depth to slice up space.
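Translating a tracked position into a grid of zones could look like the Python sketch below. It assumes the skeleton gives a horizontal x and a depth z in metres over a known floor area; the grid size, floor limits and the `grid_cell` name are hypothetical, and Isadora's actual output scaling may differ, so the ranges would need calibrating to the real values.

```python
def grid_cell(x, z, x_range, z_range, cols, rows):
    """Map a tracked (x, z) floor position to a (col, row) cell, or None if outside.

    x_range, z_range: (min, max) limits of the active area; min inclusive, max exclusive.
    """
    x_min, x_max = x_range
    z_min, z_max = z_range
    if not (x_min <= x < x_max and z_min <= z < z_max):
        return None  # participant is outside the active area
    # Scale each coordinate to a cell index; clamp guards against float rounding.
    col = min(int((x - x_min) / (x_max - x_min) * cols), cols - 1)
    row = min(int((z - z_min) / (z_max - z_min) * rows), rows - 1)
    return col, row

# Hypothetical 3 x 3 grid over a floor 3 m wide, 1 m to 4 m from the camera.
print(grid_cell(0.2, 2.5, (-1.5, 1.5), (1.0, 4.0), 3, 3))  # (1, 1)
```

Each (col, row) cell can then act as a zone, for example by triggering only when the cell changes.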

Best wishes

Russell