[ANSWERED] Waterfall (particles) simulation

-

Hello everyone! I am working with particle systems and I cannot create a falling cascade effect, because I can't see a way to generate particles along the ENTIRE x axis simultaneously. Any suggestions?

Once I achieve this, my idea is that the audience can interact with the particle waterfall in some way, through motion tracking.

Something like this

Thanks a lot!

Best,

Maxi-RIL -

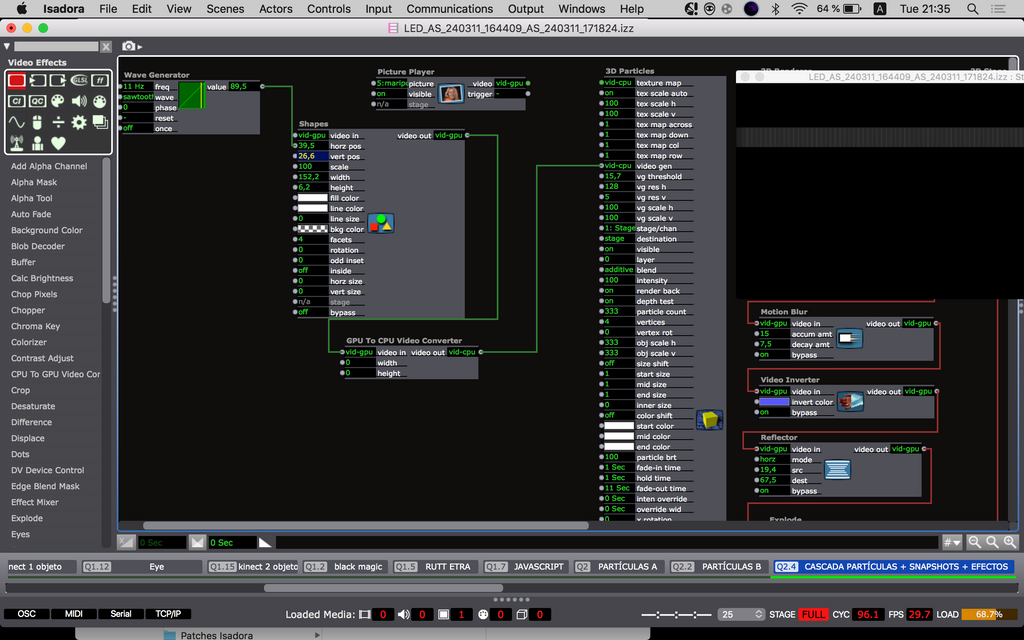

There is actually a way to generate particles across the whole x axis simultaneously by using the Video Gen input. If you use a Shapes actor to generate a line across the top of the screen and convert it to CPU, you can send it to Video Gen. Change the vertical resolution of the VG to something really low. It's a bit less predictable than generating particles by location, but it should work. The video input needs to be something that is constantly moving, so I cheat by vibrating the shape with a Wave Generator.
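A rough way to picture why this works: if every bright pixel of a low-resolution frame acts as an emitter, a horizontal line in the top row becomes a row of emitters spanning the full width. The Python sketch below illustrates only that general idea; it is not how Isadora's Video Gen input is implemented, and `emitters_from_frame`, the threshold and the stage size are hypothetical.

```python
def emitters_from_frame(frame, threshold, stage_width, stage_height):
    """Return an (x, y) stage position for every pixel brighter than threshold.

    frame is a list of rows; each row is a list of brightness values in 0..1.
    """
    rows = len(frame)
    cols = len(frame[0]) if rows else 0
    positions = []
    for r, row in enumerate(frame):
        for c, value in enumerate(row):
            if value > threshold:
                # Map the centre of pixel (c, r) onto stage coordinates.
                x = (c + 0.5) / cols * stage_width
                y = (r + 0.5) / rows * stage_height
                positions.append((x, y))
    return positions


# A 2-row by 8-column frame: a bright line across the top row only,
# standing in for a Shapes line fed in at a very low vertical resolution.
frame = [[1.0] * 8, [0.0] * 8]
spawn = emitters_from_frame(frame, threshold=0.5, stage_width=800, stage_height=600)
print(len(spawn))  # 8
print(spawn[0])    # (50.0, 150.0)
```

Lowering the vertical resolution means fewer rows, so each lit pixel covers a wider band of the stage; the horizontal resolution decides how many emitters sit along the x axis.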

-

@dbini Oh, what great news!

So you're saying I should use the GPU to CPU converter on the Shapes actor's video output? Or did I misunderstand?

Thanks a lot!

Best,

Maxi

-

@dbini Could you point me in the right direction? I'm doing something wrong. Sometimes something happens, but it isn't right. I don't know how to use Shapes properly (among other things, haha).

Thank you!

Maxi -

That patch is looking OK. I think it would help to increase the Particle Count to something like 10,000. The VG system generates a lot of particles, and if yours have a lifespan of 11 seconds, it will soon reach its limit of 333.
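The arithmetic behind that limit: once births and deaths balance, the number of live particles is roughly the emission rate multiplied by the lifespan (Little's law). A minimal Python sketch, assuming a constant emission rate; the 100 particles per second figure is hypothetical, since the real rate depends on what the Video Gen input produces.

```python
def steady_state_count(rate_per_second, lifespan_seconds):
    """Particles alive once births and deaths balance (Little's law: L = rate * lifespan)."""
    return rate_per_second * lifespan_seconds


def max_rate_for_cap(particle_cap, lifespan_seconds):
    """Highest constant emission rate that never exceeds the particle cap."""
    return particle_cap / lifespan_seconds


def seconds_until_cap(rate_per_second, particle_cap, lifespan_seconds):
    """Time until the cap is reached, or None if the steady state fits under it.

    Before the first particles die (t < lifespan) the count grows as rate * t.
    """
    if steady_state_count(rate_per_second, lifespan_seconds) <= particle_cap:
        return None
    return particle_cap / rate_per_second


lifespan = 11.0  # seconds, from the patch discussed above
print(round(max_rate_for_cap(333, lifespan), 1))     # 30.3
print(round(max_rate_for_cap(10_000, lifespan), 1))  # 909.1
print(seconds_until_cap(100, 333, lifespan))         # 3.33 (hypothetical 100 particles/s)
```

So with an 11 second lifespan, a cap of 333 only sustains about 30 new particles per second, while 10,000 leaves room for roughly 900 per second. Shortening the lifespan has the same effect as raising the cap.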

Also, I thought it might be good to use a more varied video input instead of Shapes. Maybe it would look better with a line of text that is constantly changing; this might give you a more organic stream of particles.

-

Hi,

Love where this thread is going. Here is a demonstration patch that shows a method of live particle interaction using the 'Gravity Field' parameters. It isn't optical flow, but it mimics the effect demonstrated in the TD video. I have used a couple of Shapes actors here, but I also tried it with iKeleton OSC input, and it worked great with human body tracking driving the shapes.

interactive_particles.zip

Best Wishes

Russell

-

@bonemap It looks incredible! Thank you.

I see that you used the 3D Model Particles actor instead of the 3D Particles actor I was using.

Now the question is how to replace those shapes with human interaction and reach the same visual result.

PS: At least on my computer, the two shapes with the Path macro bring the Load level up to 80 percent.

Best,

Maxi-RIL -

Hi,

I would have to say that using Isadora particles in general requires a lot of CPU processing power. It is not the Shapes and Circular Path actors that are creating the load; it is the particle system and the real-time interactivity computation. On my machine the load is under 40%, but I would say that is still high. You can reduce the load by reducing the number of particles and reducing the rate of the top Pulse Generator. Many of my patches that include particles are carefully managed, as they can quickly load the CPU. There may also be memory leaks and bugs in these old Isadora modules (which are arguably due for an update).

The other question you have, about human body tracking, is something you can tackle from several angles. However, I would develop the body tracking first and then bring it to the particle system. For example, I believe skeleton tracking is well documented for Isadora. I used the wrist parameter stream from the iKeleton OSC iPhone app to move the Shapes actors in my interactive particles patch with my hands. The wrist OSC x and y data is connected to the horizontal and vertical position inputs of the Shapes actor and then calibrated using Limit-Scale Value modules.
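The calibration step amounts to clamping the incoming tracking value to an input range and mapping it linearly onto an output range, which is roughly what a Limit-Scale Value module is used for here. A minimal Python sketch of that mapping; the 0..1 wrist range and the -50..50 Shapes position range are assumptions for illustration, not values taken from iKeleton or the patch.

```python
def limit_scale(value, in_min, in_max, out_min, out_max):
    """Clamp value to the input range, then map it linearly onto the output range."""
    if in_min == in_max:
        raise ValueError("input range must not be empty")
    low, high = min(in_min, in_max), max(in_min, in_max)
    clamped = max(low, min(high, value))
    t = (clamped - in_min) / (in_max - in_min)
    return out_min + t * (out_max - out_min)


# Hypothetical calibration: wrist coordinates arrive normalised to 0..1, and the
# Shapes position inputs are assumed to run from -50 (one edge) to 50 (the other).
wrist_x, wrist_y = 0.5, 0.25
shape_h = limit_scale(wrist_x, 0.0, 1.0, -50.0, 50.0)  # 0.0 (centre)
shape_v = limit_scale(wrist_y, 0.0, 1.0, 50.0, -50.0)  # 25.0 (output reversed to flip the y axis)
print(shape_h, shape_v)
```

Narrowing the input range to the span the performer actually reaches (measured during calibration) lets a small arm movement sweep the shape across the whole stage, and the clamp stops tracking glitches from throwing the shape off screen.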

You can try connecting a live stream of your performer directly to the Gravity Field video input of the 3D Model Particles actor; it has a Threshold calibration parameter as well. However, if your computer is already showing a high load with my simple patch, it is only going to get worse when you ask it to compute even more.

Best wishes

Russell

-

@bonemap Thanks, bonemap!

I did a quick test, and the Video In Watcher turned out to be more efficient than the two Shapes actors (with the Threshold at almost 100%).

What I don't quite understand is the "grid" of square points that appears if I increase GFh and GFv. I understand that it reflects the resolution of the gravity field (GF) for the video input, but I still don't understand why the points are visible.

PS: Other important variables are the lighting, the distance of the object/body from the camera, and the amount of threshold.

Best,

Maxi

-

@ril said:

You are right about the efficiency of the Video In Watcher. This is an excellent option for live-stream video interactivity. Thanks for trying it out. One reason it works so well is that the gravity field parameters in my patch are set to a very low resolution. This is great to remember for future project development!

> I understand that it is the resolution of the GF of the video input but what I still don't understand why they are seen

There is a parameter to hide the dots: 'gf visualize' can be toggled on or off. Its main purpose is to let you accurately calibrate the simulation to the input video using 'gf scale h', 'gf scale v', 'gf res h' and 'gf res v'. In my example, it was critical to see the gravity field dots align with the Shapes actors' input video (and I had forgotten to toggle the parameter off before posting the patch).

It's great that you are progressing with the gravity field video input. Please post some notes on how you arrive at an outcome.

Best wishes

Russell

-

I did a second quick test using Mark Coniglio's video of the hand on a black background, and your patch works incredibly well!

What we would have to figure out is how to track bodies efficiently in an installation setting, without black backgrounds and clearly defined shiny objects like in Mark's video. With that, we could start developing immersive experiences with Isadora!!

Best, Maxi-RIL

-

In the past, I have used several methods for isolating performers. One is a depth camera (Kinect etc.) video feed, because it isolates the human figure by calibrating a depth plane/distance, e.g. with OpenNI. However, you can also use the 'Difference' video actor to isolate just the moving elements of a live video input. There are also thermal imaging cameras (I have an old FLIR SR6).
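The idea behind isolating moving elements is frame differencing: compare each pixel with the previous frame and keep only pixels whose brightness changed by more than a threshold. A minimal Python sketch of that general technique, assuming grayscale frames stored as nested lists; it is not a description of how Isadora's Difference actor is implemented internally, and `difference_mask` is a hypothetical name.

```python
def difference_mask(previous, current, threshold):
    """Mark pixels whose brightness changed by more than threshold between two frames.

    Frames are lists of rows of brightness values in 0..1; the result uses 1 for
    "moving" and 0 for "static". Anything that stops moving drops out of the mask.
    """
    return [
        [1 if abs(cur - prev) > threshold else 0 for prev, cur in zip(prev_row, cur_row)]
        for prev_row, cur_row in zip(previous, current)
    ]


# A bright "performer" pixel moves one step to the right; the 0.25 pixel is sensor noise.
previous = [[0.2, 0.2, 0.2],
            [0.2, 0.9, 0.2]]
current = [[0.25, 0.2, 0.2],
           [0.2, 0.2, 0.9]]
print(difference_mask(previous, current, threshold=0.1))  # [[0, 0, 0], [0, 1, 1]]
```

The threshold trades sensitivity against noise: set it too low and lighting flicker shows up as motion, too high and slow movements disappear. A performer who stands still also vanishes from the mask, which is the main difference from a depth or thermal feed.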

Best Wishes

Russell

-

@bonemap The Difference actor made the "difference"... it works great!

PS: How do I insert the video here so you can see it?

-

Great to hear what a difference it makes!

If you want to share a video you can try one of the following:

Upload to YouTube or Vimeo, and then paste the link here.

Take a screen grab with a GIF-making tool like Giphy Capture and drop the .gif file into the forum thread (if you do this, you will need to keep the .gif file under 3 MB, or it will not upload).

Best wishes,

Russell
