Hey all,

I'm designing a show at a university that uses Watchout. I know nothing about Watchout, but I was hoping to design in Isadora. It's not a tremendously tough show, but they don't want to recable or dismantle their Watchout system. I'll be honest: nobody at the university seems to know much about Watchout, so I'm speaking from a place of profound ignorance. My question is: can Isadora be used "through" Watchout? As I understand it, there are multiple computers running multiple projectors via the Watchout dongle, and they don't want to dismantle that. Is there a way to integrate the two? If it is possible, we'll have a Watchout programmer, so it won't be me doing it (thankfully). I searched the forum and all I found was a thread from 2015. Any thoughts greatly appreciated!

Jake

Hi! Is there a way to change the frame rate (fps) of a Movie Player/Projector directly from the control interface, without having to go to Preferences > Target Frame Rate? Thanks

Dear community,

I am using the "Midi Enable" actor in my patch and I am trying to do the same thing for OSC, but I couldn't find an equivalent actor. Could someone please point me in the right direction on how to enable/disable incoming OSC based on a certain status? Thank you very much for helping a newcomer!
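The behaviour being asked for is essentially a gate: incoming messages pass through only while a status flag is on. A minimal Python sketch of that logic, purely to illustrate the idea outside Isadora; the `OscGate` class and the `/fader/1` address are hypothetical and not part of Isadora or any OSC library.

```python
from dataclasses import dataclass, field


@dataclass
class OscGate:
    """Forward incoming (address, value) messages only while enabled."""
    enabled: bool = True
    passed: list = field(default_factory=list)

    def set_enabled(self, status: bool) -> None:
        # The status would come from elsewhere in the patch (e.g. a toggle).
        self.enabled = status

    def receive(self, address: str, value: float) -> bool:
        # Drop the message while disabled; otherwise hand it downstream.
        if not self.enabled:
            return False
        self.passed.append((address, value))
        return True


gate = OscGate()
gate.receive("/fader/1", 0.5)  # passes
gate.set_enabled(False)
gate.receive("/fader/1", 0.8)  # dropped
print(gate.passed)  # [('/fader/1', 0.5)]
```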

Best wishes,

Hendrik

Dear community,

I am looking for a way to bring a Comment actor's description to the front, but I can't find the usual "bring to front" option found in drawing software. So I'm wondering: is it simply not possible, or am I just not clever enough?

Thank you for your support!

Hi, I've just tried installing isadoramac-326f00-std.dmg on a 16-inch MacBook Pro (2023, M2 Max) running Ventura 13.0 and get the prompt...

To install "Isadora 3 - Standard Version (Intel/ARM)" you need to install Rosetta

I was expecting Isadora to run natively without requiring Rosetta (since I'm not using the OpenNI Tracker or any other specific add-ons), so how should I best proceed?

I have also just upgraded from Ventura 13.0 to 13.2.1 and get the same prompt. I have also logged a technical support ticket.

Rgds, Mr J

Hi all,

I would love a little more sophistication in how Isadora handles internal cues. Right now, there are times when I need to build multiple cues within a single scene. I use a Sequential Trigger to fire the internal cues in order and then a Jump when I'm ready to move on to the next cue. I've nothing against this, it works well, but I would love it if there were a way to incorporate that into the Isadora cue numbering system.

Perhaps a Cue actor (or Trigger Cue actor) of some sort, maybe one that uses the new addressing method that is similar to OSC addresses? If a Cue actor placed in a scene could add multiple cue numbers to that scene and to the scene selector in the Control Panel (perhaps tabbed in under the scene they live in), internal cues would be much easier to identify.

This would also be useful with MIDI Show Control or the Jump to Cue actor, since you could trigger a cue that is part of a sequence of cues living inside a scene.

Also, I'd love an Activate Cue actor that works like the Activate Scene actor but uses the Cue Number function of the Jump to Cue actor. It would be a useful way to activate background scenes without having to know exactly where they live in your cue stack.

Thanks so much,

Cheers,

Cam.

Hi, smart people.

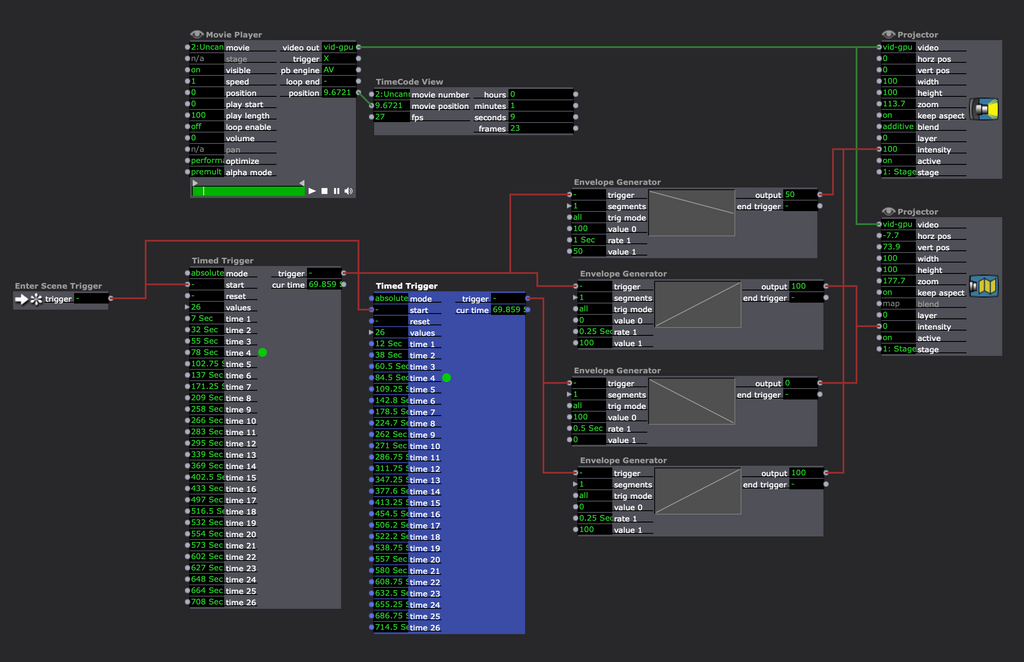

I wanted to create triggers at multiple movie positions in a Movie Player, and built this patch (see the screenshot above). I couldn't figure out which actor would let me use a precise movie position to trigger Envelope Generators. The only way I could think of was to convert movie positions into actual time with the TimeCode View actor and do the math in my head to enter values into Timed Trigger actors. Can someone tell me a more efficient and precise way of doing this?
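The underlying logic is threshold crossing: fire a trigger when the playhead passes a chosen position, rather than converting to time and counting. A minimal Python sketch of that idea, assuming the movie position is reported on a 0-100 scale (percent of duration); `PositionTriggers` and `position_to_seconds` are hypothetical names for illustration, not Isadora actors.

```python
def position_to_seconds(position_pct: float, duration_s: float) -> float:
    """Convert a 0-100 movie position into seconds (assumes a percent scale)."""
    return position_pct / 100.0 * duration_s


class PositionTriggers:
    """Fire each cue point once when the playhead crosses it moving forward."""

    def __init__(self, cue_points):
        self.cue_points = sorted(cue_points)
        self.last = None  # no previous position yet, so the first update never fires

    def update(self, position):
        fired = []
        if self.last is not None and position >= self.last:
            # Every cue point in (last, position] was crossed since the previous update.
            fired = [p for p in self.cue_points if self.last < p <= position]
        # A backwards jump (loop or scrub) just re-arms the cue points without firing.
        self.last = position
        return fired


triggers = PositionTriggers([12.5, 40.0, 87.25])
for pos in [0.0, 10.0, 13.0, 45.0, 90.0]:
    print(pos, triggers.update(pos))
# 13.0 fires [12.5], 45.0 fires [40.0], 90.0 fires [87.25]
print(position_to_seconds(25.0, 120.0))  # 30.0
```

Comparing against the previous position (instead of testing for an exact match) means a cue point still fires even when no update lands exactly on it.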

Thank you!!

So I'm setting up a theater piece with two TH2Go units (DisplayPort edition).

Direct HDMI cable runs to these 3840 x 1920 displays work fine, even quite long (20 m) ones.

But because we have some even longer runs, we also need to use some Blackmagic HDMI/SDI Micro Converters. The problem is that the TH2Go refuses to recognize the Blackmagic Micro Converters: the white "I've got a signal" light near the HDMI input never turns on. Any ideas?

Thanks in advance for the quick help!

Mark

Hi all,

For an upcoming project, I need to use my IDS DBK33GX462 Gigabit Ethernet camera as a live input on macOS 10.15.7 Catalina. It doesn't show up in the device list under "Live Capture Settings".

(And the camera manufacturer, The Imaging Source, doesn't provide any drivers or software for macOS...)

Any chance I can make this work?

Hi everyone

My question might be a weird one. I have used Isadora with high school students several times for projects (poetry films) in my creative writing classes. We've built interactive films using an Xbox Kinect and it has worked OK. But up until now, the collaboration has happened on my laptop, which is loaded with Isadora and the OpenNI Tracker plugin. My students' role was to write their poems and create the media they wanted to use with the Adobe suite and various other software tools. Based on their vision, I have been the one creating the patches with their media and poetry. So when we test and record the films, we use my laptop and they are the ones moving in front of the Kinect camera. It has been kind of clunky, but the kids have been happy with the collaboration for those particular classes (which are writing classes, not computer classes).

This spring, I'd like to do a similar unit with my students, but rather than building the patches for them, I'd like to teach them some basics of using Isadora themselves. So I thought we'd rent Isadora licenses for a week for each kid. The school is willing to pay for the rentals, so that is good, but I am having a hard time imagining how we will all share my personal Kinect camera efficiently, and buying more Kinects is definitely out of budget. On the other hand, if this unit goes well, I may be able to convince the school to buy a set of perpetual licenses and more equipment so that I can do a much bigger project next year.

Anyway, I have a vague idea that I will create a standard patch to get the students started on their own laptops and then teach them how to modify it with other actors. But how do I emulate the Kinect input so that they can all work on their individual projects simultaneously? I was thinking they could use a Mouse Watcher actor as input to get a rough idea of the interactivity while they wait for their turn at the camera. Or perhaps I could record a person's movement and have the kids use that recording with their patches while they wait their turns, but it seems like neither of these solutions would emulate the depth that we get with the Kinect.
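One way the recording idea could keep depth is to record the tracker's numeric joint data (x, y and z per joint) once from the real Kinect, rather than a video, and let every laptop replay those numbers. A minimal Python sketch of recording and time-based playback, assuming joint coordinates can be logged as rows of time, joint name and x/y/z; how the values get out of and back into Isadora (for example via OSC or a text file) is an assumption not shown here, and `save_recording`, `load_recording`, `sample` and the `right_hand` joint are hypothetical names.

```python
import bisect
import csv
import io


def save_recording(frames, fileobj):
    """Write (time_s, joint, x, y, z) rows captured once from the real Kinect."""
    writer = csv.writer(fileobj)
    writer.writerow(["time_s", "joint", "x", "y", "z"])
    for row in frames:
        writer.writerow(row)


def load_recording(fileobj):
    """Return {joint: (times, positions)}; assumes rows were written in time order."""
    tracks = {}
    for row in csv.DictReader(fileobj):
        times, positions = tracks.setdefault(row["joint"], ([], []))
        times.append(float(row["time_s"]))
        positions.append((float(row["x"]), float(row["y"]), float(row["z"])))
    return tracks


def sample(tracks, joint, t, loop=True):
    """Most recent recorded (x, y, z) for `joint` at playback time t (seconds)."""
    times, positions = tracks[joint]
    if loop:
        # Wrap playback time so the recording repeats endlessly.
        t = t % times[-1] if times[-1] > 0 else 0.0
    i = bisect.bisect_right(times, t) - 1
    return positions[max(i, 0)]


frames = [
    (0.0, "right_hand", 0.2, 0.5, 1.8),
    (0.5, "right_hand", 0.4, 0.6, 1.5),
    (1.0, "right_hand", 0.6, 0.5, 1.2),
]
buf = io.StringIO()
save_recording(frames, buf)
buf.seek(0)
tracks = load_recording(buf)
print(sample(tracks, "right_hand", 0.75))  # (0.4, 0.6, 1.5)
print(sample(tracks, "right_hand", 1.25))  # wraps to 0.25 s: (0.2, 0.5, 1.8)
```

Because z is stored alongside x and y, a patch driven by the replayed values still responds to depth, even though the movement itself is fixed.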

So I thought I would ask you all. It doesn't have to be perfect. I just want a way to emulate or fake the Kinect input so that all of the students can work simultaneously on their own laptops rather than all of us sharing one. How do I do this given that we only have one camera?

Thanks!