Hey everybody.

I'm looking for an actor or procedure that lets me send one part of an image (video or picture) to one projector and another part of the same image to another projector. For anyone who uses Jitter, the closest equivalent is the Scissors object (jit.scissors), which splits a matrix into evenly spaced sub-matrices.
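The arithmetic behind a jit.scissors-style split is simple: divide the frame into a grid of equal rectangles, then crop each rectangle and send it to its own projector. A minimal Python sketch of that crop math, assuming the frame size is known in pixels; `split_rects` is a hypothetical helper for illustration, not an Isadora or Jitter API, and giving leftover pixels to the last column/row is a choice of this sketch.

```python
def split_rects(width, height, cols, rows):
    """Return (x, y, w, h) crop rectangles that tile a width x height frame
    into cols x rows sub-regions. Leftover pixels (when the size does not
    divide evenly) go to the last column/row so nothing is lost."""
    rects = []
    base_w, base_h = width // cols, height // rows
    for r in range(rows):
        for c in range(cols):
            x, y = c * base_w, r * base_h
            w = width - x if c == cols - 1 else base_w
            h = height - y if r == rows - 1 else base_h
            rects.append((x, y, w, h))
    return rects

# Two projectors side by side: left half and right half of a 1920x1080 frame.
print(split_rects(1920, 1080, 2, 1))
# [(0, 0, 960, 1080), (960, 0, 960, 1080)]
```

Each rectangle would then be reproduced with whatever crop or mapping tools you use in Isadora, one per projector.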

Thanks a lot

Hey guys, I'm trying to make some "Muybridge-Marey inspired" material with black-and-white pictures (1 MB JPGs) that I load into a Picture Player and send to a 3D Projector so I can move them around in 3D stage space.

I have two questions:

a) My computer runs very slowly; I guess the patch is not well built (MacBook Pro Retina, 2018, i9, 6 cores, 32 GB RAM at 2400 MHz, Radeon Pro Vega 4 GB). It shows 35% load when I'm running the patch. I advance the pictures with a Pulse Generator because I want to be able to change the speed. I haven't rendered the sequence as an MP4 (I know you'll say "but you can change the speed of a movie too"). Maybe this is the issue?

b) Does anyone know an easy way to convert a color picture to black and white in Isadora?
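One common way to turn a color image into black and white is to compute its luma with the ITU-R BT.601 weights (0.299 R + 0.587 G + 0.114 B) and write that value into all three channels. A minimal sketch of the per-pixel math only, assuming 8-bit RGB tuples; `to_grayscale` is a hypothetical helper, not an Isadora feature, and inside Isadora a desaturating effect would do this work for you.

```python
def to_grayscale(pixels):
    """Convert a list of 8-bit (R, G, B) tuples to black and white using
    ITU-R BT.601 luma weights; each output pixel has R == G == B."""
    out = []
    for r, g, b in pixels:
        y = round(0.299 * r + 0.587 * g + 0.114 * b)
        y = max(0, min(255, y))  # clamp to the 8-bit range
        out.append((y, y, y))
    return out

print(to_grayscale([(255, 0, 0), (0, 255, 0), (255, 255, 255)]))
# [(76, 76, 76), (150, 150, 150), (255, 255, 255)]
```

Green contributes most to perceived brightness, which is why pure green comes out lighter than pure red.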

Thanks a lot

@laobra (Ad Kaplan)

Hi,

I was wondering if someone could help me out with best practices for receiving NDI.

I'm receiving a feed from another computer running TouchDesigner.

We're connected through the same LAN, which has no other computers on it and is not connected to the internet.

I do get imagery through to my system, but only at about 5 to 8 FPS.

The feed in my NDI Watcher is very laggy. I've tried adjusting some parameters, such as Isadora's frame rate, the General Service Tasks setting, and the bandwidth setting of the NDI actor, but I never seem to get rid of the stutter.

On their end I've also tried adjusting the frame rate, etc. Is there anything I'm forgetting to check or adjust?

Or could it be that my system is just old or incompatible with their newer MacBook?

My system:

Windows 10

Intel i7-8750H

32 GB RAM

Nvidia GTX 1060

Their system:

Apple MacBook Pro M1

OS: macOS Ventura 13.0

It would be amazing if someone could share their best practices.

Abel

I can't find a way to scrub smoothly through a video (using the position input of the Movie Player).

Can anyone point me in the right direction?

Hello folks,

I've just finished a tour that uses two ultra-short-throw beamers to create a video floor. I map one beamer to the floor using the four corners of the standard IzzyMap output and then use a 4 x 4 grid to adjust the other beamer's image to match the first one.

The issue I have been running into is selecting points to adjust: the corner points of the grid sit in the same place as the corner points of the whole map. It seems impossible to select one side of the whole map if the edges of the grid map lie along that side.

Is there a keyboard shortcut to select either the grid map or the main map? Alternatively, could the main map's handles be bigger, or sit on little stalks so that they extend beyond the corners? Could we shift-click two handles to select one side? Or could the outer part of a handle be connected to the main map and the inner part to the grid map?

Dear all,

I need some help. I have upgraded from v2 to v3.

A facility I used a lot in v2: when configuring the stage setup, I could move the mouse across the computer screen, and the main stage projection (Stage 1) would show the corners and the mouse pointer. So, with a Bluetooth mouse and the computer's output on the main stage, I could adjust the stage size from the front seats of the theatre. Is this facility available in v3? I can't find it: I can open the setup screen, but I can't make the adjustments on the main stage.

Transmedia

I'm here to ask for help!

I'm seriously interested in running my performance with the Binary & Text Watcher, but is the available study material just this, or can I obtain more?

The same applies to activating a synth in Isadora. For example, I can work with analog music hardware in other software, but I'm a longtime fan of your software, which is the easiest to use...

Thanks, guys

Hi there,

I am hoping there is a solution for this:)

I am working on a project where I would like to layer live feeds like a collage. We are making a performance in which a book comes to life, and the book is made of collages of, e.g., leaves and little figures. I would like to create a moving background in front of which I can animate little figures. (I have imagined a green screen, but it is difficult to find a color to key out, so I am wondering if there are other ways.) Is there a way to track hands moving objects and cut those objects out from a surface to add them to an image? I don't know if this can be done in Eyes++, but if it is possible, I could do without the video screen in the background and the camera pointing at it, which seems like a clumsy setup; so far, though, that is as far as my imagination has gone.

My dream would be to use, e.g., a black background or any other background, but since the figures have some black outlines, I don't know how to make this work. Any ideas are welcome. I hope this makes sense :) I have added a picture of the figures.

How can I get the figure at the bottom to move into the image, with a live camera filming my hands moving it? Can I do that?

All the best Eva

Hi there.

I've been playing with live tracking using a Kinect, Shapes, and the Matrix Color Send / Art-Net Send actors.

Well... it works, but as a Millumin and Resolume user, I miss one or two things that would let me stay in Isadora for this purpose.

The idea is to pixel-map video onto 4 LED strips of 10 pixels each.

If I make a 10 px strip at the normal pixel size, it covers too small an area of the media file.

If I make a single pixel with 10 repetitions, I can space them across the media. That's cool, but:

> We don't really have a good visualization of where the pixels sit in the media. A size setting for the pixel visualization would be great, and perhaps a media preview replacing the green screen in the actor? Perhaps a grid too, so we can align pixel strips...

> We cannot reverse the order of the pixels: it always runs from 1 to 10. Sometimes it is helpful to reverse it from 10 to 1 without going into the Art-Net patch (with 100 px that takes so long). Rows/cols are cool, but a flip option is missing (and perhaps a rotation, so we can make diagonals?).
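The "flip" and "diagonal" requests come down to how pixels are sampled along a strip. A minimal Python sketch, assuming the frame is a 2D list of (R, G, B) tuples and the strip uses RGB order with 3 DMX channels per pixel (a real strip may use GRB or another order). `strip_to_dmx` and its parameters are hypothetical, not part of Isadora's Art-Net actors. At 3 channels per pixel, 4 strips of 10 px need 120 channels.

```python
def strip_to_dmx(frame, start, end, count, reverse=False):
    """Sample `count` evenly spaced pixels on the line from start=(x0, y0)
    to end=(x1, y1) in `frame` (a list of rows of (R, G, B) tuples) and
    return a flat DMX channel list [R1, G1, B1, R2, G2, B2, ...].
    Any start/end pair works, so diagonal strips come for free."""
    (x0, y0), (x1, y1) = start, end
    samples = []
    for i in range(count):
        t = i / (count - 1) if count > 1 else 0.0  # 0.0 .. 1.0 along the line
        x = round(x0 + t * (x1 - x0))
        y = round(y0 + t * (y1 - y0))
        samples.append(frame[y][x])
    if reverse:  # the "flip": the last pixel becomes channel group 1
        samples.reverse()
    channels = []
    for r, g, b in samples:
        channels.extend((r, g, b))
    return channels

frame = [[(255, 0, 0), (0, 255, 0), (0, 0, 255)]]  # one row: red, green, blue
print(strip_to_dmx(frame, (0, 0), (2, 0), 3, reverse=True))
```

Reversing the sample list before packing is what saves re-entering 100 channel assignments by hand.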


I also found some errors when playing with the offset: when I push it too far, it changes the low and high channels but then stays at that value, and sometimes it doesn't refresh in real time, so I have to jump to another cue and load back.

Another request: is there any chance of .obj / .fbx support for 3D models? I work with Blender for 3D, and it doesn't export .3DS anymore (I found a Python patch, which doesn't work).

I control 3D objects in Blender from Isadora via OSC, and that works amazingly well; now I'm waiting for a Syphon output from Blender so I can bring it back into Isadora with the Syphon Receiver.

Cheers !

Michaël

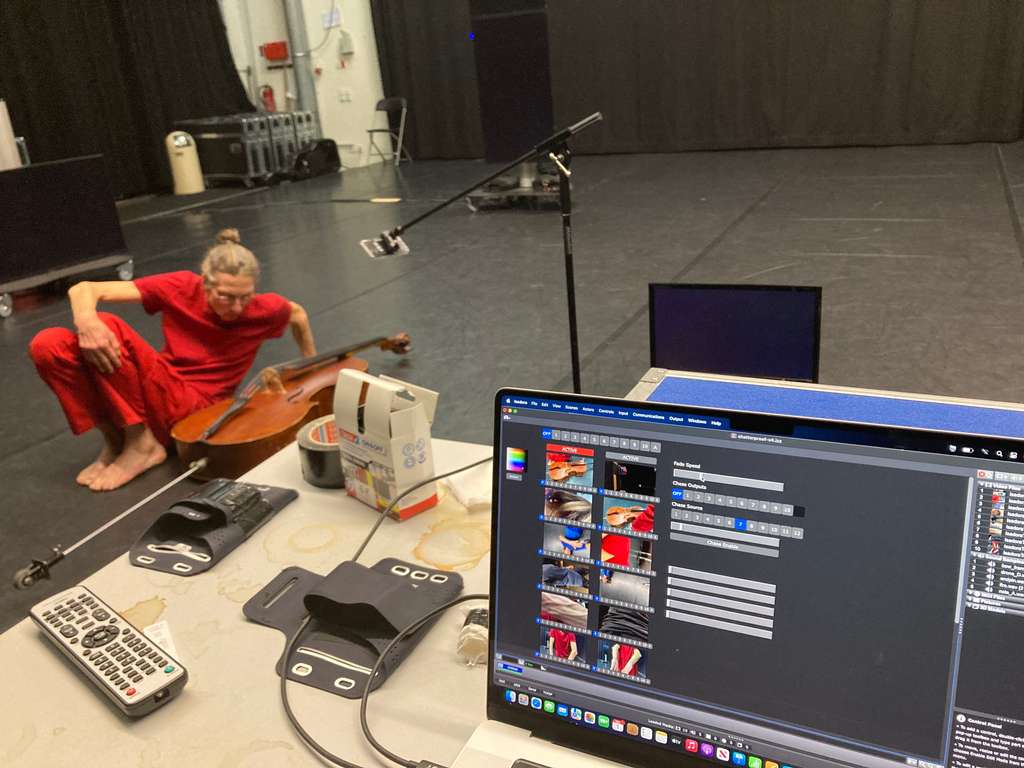

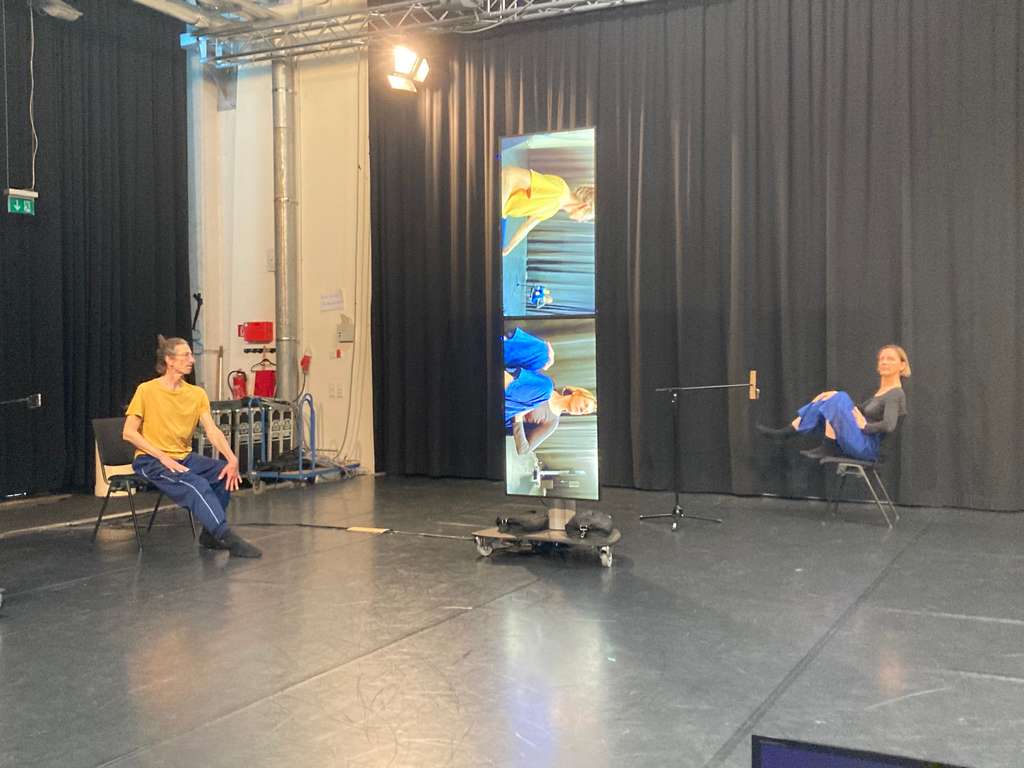

Some final pictures and reflections from our research project "Shatterproof", conceived and directed by Sten Rudstrom, and performed by Ingo Rülecke and Ulrike Brand. I was so fortunate to work with such talented, generous and creative souls for this work.

The piece itself is concerned with how we regard the human form when it is pitted against video imagery. I used Isadora to capture live feeds from iPhones, which the performers could place on their bodies or in various locations within the space. Then I performed a "live mix" of those images on as many as 8 displays. The mandate I received from Sten (the director) was to oscillate between two polarities: one where I attempt to tear the viewer's eyes away from the compelling improvisations of the performers, and another where I relax that tension by removing imagery from the displays or showing a single image on one of the smaller monitors. The goal in all of this was to raise viewers' awareness of their own feelings as they are pushed and pulled towards (or away from) the imagery and the performers.

It was such a great process for me. The piece is totally improvised – there is no script whatsoever for this work. This means the images I am able to capture are never the same. Because of this, I simply had no choice but to be utterly present to the material and relationships offered to me by the performers. I really felt **SO** alive with them as each rehearsal or performance progressed.

Thanks again to Sten, Ulrike and Ingo for such an enlivening process.

Technically, in Isadora, I created a very flexible 13-by-8 matrix router: I could choose any of 10 movies or three live feeds (three NDI Watchers receiving live video from the iPhones) as sources, and route these to any of the 8 displays in the room. For the displays that were doubled up, I could choose to send an individual image to each display, or to send a single image to both, so that the two displays formed a single image. (See the face of the 'cello player in the first picture for an example.)
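The routing idea can be pictured as a lookup table: each of the 8 displays holds one (source, region) pair or nothing, and a doubled-up pair shows one source split across both screens. A minimal Python sketch of that logic only, with hypothetical source names (`movie1`…`movie10`, `ndi1`…`ndi3`) and a hypothetical `MatrixRouter` class; it is not how the Isadora patch itself was built.

```python
SOURCES = [f"movie{i}" for i in range(1, 11)] + ["ndi1", "ndi2", "ndi3"]  # 13 sources
NUM_DISPLAYS = 8


class MatrixRouter:
    """13 x 8 router: each display shows one (source, region) pair or nothing."""

    def __init__(self):
        self.routes = [None] * NUM_DISPLAYS  # None = display blanked

    def route(self, source, display, region="full"):
        if source not in SOURCES:
            raise ValueError(f"unknown source: {source}")
        self.routes[display] = (source, region)

    def span_pair(self, source, left, right):
        # A doubled-up pair acts as one wide screen: each display shows half.
        self.route(source, left, "left")
        self.route(source, right, "right")

    def clear(self, display):
        self.routes[display] = None


router = MatrixRouter()
router.route("ndi2", 0)              # an iPhone feed, full frame, on display 0
router.span_pair("movie3", 4, 5)     # one movie spread across a doubled-up pair
print(router.routes)
# [('ndi2', 'full'), None, None, None, ('movie3', 'left'), ('movie3', 'right'), None, None]
```

Keeping "blank" as an explicit state makes the "remove imagery from the displays" gesture just another routing change.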

The patch ran on my MacBook Pro M1 Max, which offers up to three video outputs. It worked beautifully. It never showed a LOAD of more than 10%, even while playing 10 movies at the same time and receiving three NDI feeds from the iPhones.

I originally tried to use two Triple Head 2 Go's to feed the eight displays, but failed to get them to work together. (They apparently had different tables of output resolutions/frequencies, so the Matrox software said they could not be used on the same computer.) In the end, I was able to get eight outputs as follows:

1. Data Path FX 4 (four)

2. Triple Head 2 Go (three)

3. Built-in HDMI output. (one)

So, aside from three days of mayhem at the beginning getting things to work, once I had this setup, everything worked beautifully for the remainder of the rehearsal period.

Pro Tip #1: If you ever try to use Blackmagic HDMI <-> SDI converters, you'll run into issues if the resolution and frequency aren't to their liking. From what other users have told me, using CAT 5 extenders for the HDMI is a much better bet.

Pro Tip #2: Make sure to turn off the HDCP copy protection in the Data Path FX 4. If you see solid red images at the output, it is turned on!