For the augmented reality (AR) stuff I saw on their website, I believe you need to be able to write applications for a smartphone or tablet, and it looks like they either bought or rented a ton of tablets (which can get expensive quickly).

Other observations:

- Infrared tracking (such as using the prop vacuum to affect a particle system projected from above) was heavily used in the works shown on both websites. It's one of the few ways to track multiple bodies in space regardless of what you're projecting onto and around those bodies, and, importantly, it is largely unaffected by both bright and low-light conditions

- Edge-blending of multiple projectors and projection mapping (projecting onto the cube, onto the floor and up the walls, and covering large areas)

- Professional-grade projectors (above commercial grade) that are capable of top-down projection (projecting straight down onto the floor is something some commercial-grade projectors aren't designed to do, so they can overheat and develop other problems)
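For anyone curious what edge-blending actually does in the overlap: each projector's brightness is ramped down across the shared strip so that the two add up to roughly uniform light. Here's a minimal Python sketch of that ramp, assuming a projector gamma of 2.2 and one commonly used S-shaped curve (the exponent `p` controls how steep it is). `blend_curve` and `blend_masks` are hypothetical names for illustration, not part of Isadora or any library.

```python
GAMMA = 2.2  # assumed projector gamma; real projectors vary, so measure or tune by eye


def blend_curve(t: float, p: float = 2.0) -> float:
    """S-shaped ramp in linear light: 0 at t=0, 1 at t=1.

    Symmetric so that blend_curve(t) + blend_curve(1 - t) == 1,
    i.e. the two projectors' light always sums to full brightness.
    """
    t = min(max(t, 0.0), 1.0)
    if t < 0.5:
        return 0.5 * (2.0 * t) ** p
    return 1.0 - 0.5 * (2.0 * (1.0 - t)) ** p


def blend_masks(overlap_px: int, p: float = 2.0,
                gamma: float = GAMMA) -> tuple[list[float], list[float]]:
    """Per-column pixel multipliers for the left and right projector across the overlap."""
    left: list[float] = []
    right: list[float] = []
    for i in range(overlap_px):
        t = (i + 0.5) / overlap_px  # centre of column i, from 0 to 1 across the overlap
        # Raise to 1/gamma so the emitted light (value ** gamma) follows the linear ramp.
        left.append(blend_curve(1.0 - t, p) ** (1.0 / gamma))
        right.append(blend_curve(t, p) ** (1.0 / gamma))
    return left, right


if __name__ == "__main__":
    left, right = blend_masks(8)
    for l, r in zip(left, right):
        print(f"{l:.3f} {r:.3f}  light sum = {l ** GAMMA + r ** GAMMA:.3f}")
```

In practice you'd tweak `p` and `gamma` by eye on the actual surface, since real projectors rarely follow an ideal gamma curve and their black levels also add up in the overlap.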

Sadly, the most important components for large-scale interactive installations like this are money (for equipment, technicians, space rental, etc.) and time (which is basically also money: during the several months it takes to make a large-scale installation work, you still need to pay your bills and rent, buy groceries so you can eat, etc.).

This may not apply in your case, but for anyone else reading this who might find it helpful: you can make some neat interactive installations, or test the basic concepts for larger ones, with one or two smaller projectors, a laptop (which typically has a built-in RGB camera, display, speakers, and microphone), and, optionally, a depth camera like a Kinect. Learn how to do body tracking with IR, create interactive content, and do edge-blending and projection mapping on weird surfaces; refine your ideas and experiment with techniques on a small scale before trying to go huge.
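As a starting point for IR body tracking, here's a minimal sketch of the core idea: threshold a grayscale IR frame and find the centroid of each bright blob. It assumes the frame is a plain 2D list of 0-255 values, and the `threshold`/`min_area` numbers are placeholders to tune. In a real project you'd more likely use OpenCV's `cv2.connectedComponentsWithStats` or Isadora's Eyes++ actor than pure Python; this just shows what those tools are doing.

```python
from collections import deque


def find_blobs(frame: list[list[int]], threshold: int = 200,
               min_area: int = 4) -> list[tuple[float, float, int]]:
    """Return (x, y, area) for each 4-connected bright region in a grayscale IR frame.

    frame is a list of rows of 0-255 values; pixels >= threshold count as "lit".
    Regions smaller than min_area pixels are dropped as noise.
    """
    h = len(frame)
    w = len(frame[0]) if h else 0
    seen = [[False] * w for _ in range(h)]
    blobs: list[tuple[float, float, int]] = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or frame[y][x] < threshold:
                continue
            # Flood-fill the region starting at (x, y), summing coordinates for the centroid.
            seen[y][x] = True
            queue = deque([(x, y)])
            sum_x = sum_y = area = 0
            while queue:
                cx, cy = queue.popleft()
                sum_x += cx
                sum_y += cy
                area += 1
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < w and 0 <= ny < h and not seen[ny][nx]
                            and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            if area >= min_area:
                blobs.append((sum_x / area, sum_y / area, area))
    return blobs


if __name__ == "__main__":
    # Tiny synthetic 6x6 "IR frame": two 2x2 bright spots plus one stray hot pixel.
    frame = [[0] * 6 for _ in range(6)]
    for yy, xx in ((1, 1), (1, 2), (2, 1), (2, 2), (4, 4), (4, 5), (5, 4), (5, 5)):
        frame[yy][xx] = 255
    frame[0][5] = 255  # noise, filtered out by min_area
    print(find_blobs(frame))  # [(1.5, 1.5, 4), (4.5, 4.5, 4)]
```

Tracking multiple people over time then comes down to matching each frame's centroids to the previous frame's (for example, nearest neighbour).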

There is one more value that matters here: the Projector actor's 'keep aspect' value. It is set to off in your patch!

Maybe this file will help you understand how scaling works: scaling-example-2024-02-05-3.2.6.izz

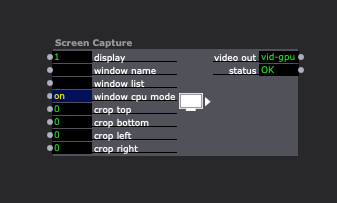

Try changing the Screen Capture actor's "window cpu mode" input property from its default of "off" to "on".

I can't test it extensively right now, but some quick tests showed the expected behavior regarding the preset and the actors' modes.

Please accept my apologies if this is obvious, but I'd like to try anyway. My guess is that your issue comes from the different aspect ratios of the content (1920x1080 = 16:9, 800x600 = 4:3, 1080x720 = 3:2). If you mix two videos with different aspect ratios, the non-preferred video is forced into the aspect ratio of the preferred one. The difference from the Scaler is that the Scaler always fills the frame (stretching the content), while the mixer may fill the leftover area with black (letterboxing), depending on the mode. That's what the h/v modes are for: deciding how to handle this.
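To make the aspect-ratio point concrete, here's a small Python sketch of the generic math (not Isadora's actual code): reducing a resolution to its aspect ratio, fitting one frame inside another with black bars, and stretching it to fill instead. The function names are hypothetical.

```python
from math import gcd


def aspect_ratio(w: int, h: int) -> tuple[int, int]:
    """Reduce a resolution to its simplest aspect ratio, e.g. 1920x1080 -> (16, 9)."""
    g = gcd(w, h)
    return w // g, h // g


def fit(src_w: int, src_h: int, dst_w: int, dst_h: int) -> tuple[int, int, int, int]:
    """Scale uniformly so the whole source fits inside the frame, centred.

    Returns (x, y, width, height); any leftover area would be black bars.
    """
    s = min(dst_w / src_w, dst_h / src_h)
    w, h = round(src_w * s), round(src_h * s)
    return (dst_w - w) // 2, (dst_h - h) // 2, w, h


def stretch(src_w: int, src_h: int, dst_w: int, dst_h: int) -> tuple[float, float]:
    """Fill the frame exactly; returns (x, y) scale factors. Unequal factors = distortion."""
    return dst_w / src_w, dst_h / src_h


if __name__ == "__main__":
    for w, h in ((1920, 1080), (800, 600), (1080, 720)):
        print(f"{w}x{h} -> {aspect_ratio(w, h)}")
    print(fit(800, 600, 1920, 1080))      # (240, 0, 1440, 1080)
    print(stretch(800, 600, 1920, 1080))  # (2.4, 1.8)
```

So a 4:3 clip fitted into a 16:9 frame becomes 1440x1080 with 240 px black bars left and right, while stretching it uses unequal scale factors (2.4 horizontally, 1.8 vertically), which is exactly the squashed look you get when content is forced to fill a frame of a different aspect ratio.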

@jtoenjes The lens on the Machine vision camera kit (with the IR-pass filter) has a variable (varifocal) focal length.

You can do the calculations here:

https://www.scantips.com/light...

At a distance of 5 m on the widest setting (4 mm), you get this field of view (FOV):

| | Dimension | Degrees |
|---|---|---|
| Width | 8.975 m | 83.82° |
| Height | 6.65 m | 67.25° |
| Diagonal | 11.17 m | 96.33° |

You can use the camera sensor spec (1/1.8" sensor size) and the lens spec (3 megapixels, 4-15.2 mm varifocal) to calculate the size. This does depend on the camera settings: you get higher frame rates at a lower resolution (binned on the camera) or if you drop to monochrome, and there are also non-native aspect-ratio options for the camera.
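If you'd rather script it than use the web calculator, the math is simple: the covered size is sensor size / focal length x distance, and the angle is 2 x atan(sensor size / (2 x focal length)). This Python sketch assumes the typical 1/1.8" active area of about 7.18 x 5.32 mm, which reproduces the table values above at 5 m and 4 mm; check your camera's datasheet, and note that cropped (non-native aspect) modes use a smaller active area.

```python
import math

# Assumed active area of a typical 1/1.8" sensor, in mm (check the datasheet).
SENSOR_W_MM = 7.18
SENSOR_H_MM = 5.32


def coverage(sensor_mm: float, focal_mm: float, distance_m: float) -> tuple[float, float]:
    """Return (size covered at distance_m in metres, field-of-view angle in degrees)."""
    size_m = sensor_mm / focal_mm * distance_m
    angle_deg = math.degrees(2 * math.atan(sensor_mm / (2 * focal_mm)))
    return size_m, angle_deg


def fov_table(focal_mm: float, distance_m: float) -> dict[str, tuple[float, float]]:
    """Width, height and diagonal coverage for the assumed sensor."""
    diag_mm = math.hypot(SENSOR_W_MM, SENSOR_H_MM)
    return {
        "width": coverage(SENSOR_W_MM, focal_mm, distance_m),
        "height": coverage(SENSOR_H_MM, focal_mm, distance_m),
        "diagonal": coverage(diag_mm, focal_mm, distance_m),
    }


if __name__ == "__main__":
    for focal in (4.0, 15.2):  # wide and tele ends of the 4-15.2 mm lens
        for name, (size, angle) in fov_table(focal, 5.0).items():
            print(f"{focal:>5} mm  {name:<8} {size:6.2f} m  {angle:6.2f} deg")
```

Running it for different mounting heights is a quick way to check whether one overhead camera can cover a given stage area before buying a second one.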

Yes, I have used it from above, including for tracking the audience from above the projector.

It's a really versatile tracking kit. Just a warning, though: Isadora does not support GigE Vision cameras directly. However, this should work fine with the Pythoner actor and this library: https://github.com/genicam/har... (I have used it directly from C++.)

Fred

Can you tell me how large an area the IR kit can track? Do you (or can you) hang the camera overhead, or does it mainly work from a front view? I do some stage-area tracking and it's always difficult.

John

@dillthekraut Thanks for replying. I think I understand what the settings preference means. My confusion comes from my (perhaps mistaken) assumption that the video mixer actor will scale clips according to that preference. That does not seem to be the case. When I mix an SD clip with an HD clip, they rarely combine according to the "larger to smallest" preference. In fact, sometimes the HD clip fills the horizontal space but is squished vertically, well out of aspect. Looking at my patch, you can see I tried to solve this by putting a scaler actor (set to 1280x720) in front of the mixer actor. This helped, but on occasion the output is still incorrect: usually one layer fits the monitor while the other layer is slightly narrower.

The 'default' value of 1920 you set is only used when 'When combining...' is set to 'scale to default...'. Otherwise, the other settings (smallest/largest image) are used and your custom values are ignored. As I understand it, if you set the mixer's scaler setting to 'prefs', it still does 'scale to smallest', because that's what you set in the main preferences.
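Here's a toy Python model of the rule as described above, just to make the precedence explicit: the custom default size only matters in 'scale to default' mode, and an actor set to 'prefs' simply inherits the main preference. This illustrates the logic as understood in this thread, not Isadora's actual implementation; the names, the (1920, 1080) default, and comparing sizes by pixel count are all assumptions.

```python
from enum import Enum


class Combine(Enum):
    """Hypothetical stand-ins for the 'When combining...' options plus an actor's 'prefs'."""
    PREFS = "prefs"
    SMALLEST = "scale to smallest"
    LARGEST = "scale to largest"
    DEFAULT = "scale to default"


def effective_mode(actor_mode: Combine, prefs_mode: Combine) -> Combine:
    """An actor whose scaler setting is 'prefs' inherits the main preference."""
    return prefs_mode if actor_mode is Combine.PREFS else actor_mode


def _pixels(size: tuple[int, int]) -> int:
    # Assumption: "smallest"/"largest" compared by total pixel count.
    return size[0] * size[1]


def resolve_size(sizes: list[tuple[int, int]], mode: Combine,
                 default: tuple[int, int] = (1920, 1080)) -> tuple[int, int]:
    """Pick the output size; the custom default only matters in DEFAULT mode."""
    if mode is Combine.DEFAULT:
        return default
    if mode is Combine.SMALLEST:
        return min(sizes, key=_pixels)
    return max(sizes, key=_pixels)


if __name__ == "__main__":
    clips = [(1920, 1080), (800, 600)]
    mode = effective_mode(Combine.PREFS, Combine.SMALLEST)
    print(resolve_size(clips, mode))  # (800, 600): the custom default is ignored
```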

@woland Thank you! Just what I needed! Thanks - FAS