Hi folks,

Whenever I comment my patches, or do anything else with text, I can't use accented characters like "á, é, í, ó, ú" or some other characters. I guess this has to do with fonts. I remember Mark once saying that this was solved in v2.5 or so, and it may have slipped back in v3.

Maybe I just have to change the font used by Isadora, but I can't find where to do that.

Cheers

Hello all. I'm starting to dip my toe into a project's development phase and am curious about the JavaScript actor, in particular whether the actor can output animated shapes.

The problem I'm trying to solve is dynamic shape-making: for example, a 10-sided shape that displays 5 of its lines in an alternating pattern (display a line, skip a line, and so on), and then, by changing the input number from 10 to, say, 24, instantly seeing 12 lines.
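The geometry behind the "display a line, skip a line" idea is small enough to sketch. This is a minimal Python illustration of the math only, assuming a regular polygon centred on the origin; it does not show how Isadora's JavaScript actor receives inputs or hands coordinates to a drawing actor, and the function names are hypothetical. Porting the same arithmetic to JavaScript should be direct.

```python
import math

def polygon_vertices(n, radius=1.0, cx=0.0, cy=0.0):
    """Return the n corner points of a regular polygon centred on (cx, cy)."""
    return [
        (cx + radius * math.cos(2 * math.pi * i / n),
         cy + radius * math.sin(2 * math.pi * i / n))
        for i in range(n)
    ]

def alternating_edges(n, radius=1.0):
    """Return every other edge of a regular n-gon as ((x1, y1), (x2, y2)) pairs.

    Edge i joins vertex i to vertex (i + 1) % n; only even-numbered edges
    are kept, giving the display-a-line, skip-a-line pattern.
    """
    pts = polygon_vertices(n, radius)
    return [(pts[i], pts[(i + 1) % n]) for i in range(0, n, 2)]

for sides in (10, 24):
    print(sides, "sides ->", len(alternating_edges(sides)), "visible lines")
```

Changing the single `sides` value regenerates the whole shape, so 10 gives 5 visible lines and 24 gives 12. With an odd number of sides the pattern cannot alternate perfectly: the last kept edge ends up next to the first one.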

I am aware of my limitations in describing what I am attempting.

I'm trying to understand what the JavaScript actor does best.

Hello everyone! I was thinking about the AI tools that are available today. Which of them do you think would be interesting to link with Isadora?

What other ideas or needs do you see where AI could be linked with Isadora for artistic creation? I'm sharing these thoughts with you to see what you think.

Big hug

Maxi- RIL

For large actors (like 3D Ropes or particles) or large user actors, a "jump cursor to input" feature would be great. Highlight the actor, press Command + J and start typing; this would filter the inputs in a tree. So if you type "gr" when using the Ropes actor, all the inputs with a label starting with "gr" would be highlighted, and pressing Tab would move the cursor to the next input that matches the filter. If that input is out of view, the screen would scroll so that the highlighted input is centred.
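The requested behaviour boils down to three small pieces of logic: prefix filtering, Tab cycling with wrap-around, and a scroll offset that centres the selected row. This is a minimal Python sketch of that logic under stated assumptions (case-insensitive matching, fixed row height in pixels); the input labels in the usage example are hypothetical, not the real 3D Ropes input names, and nothing here reflects how Isadora's UI is actually built.

```python
def matching_inputs(labels, prefix):
    """Return indices of input labels that start with the typed prefix (case-insensitive)."""
    p = prefix.lower()
    return [i for i, label in enumerate(labels) if label.lower().startswith(p)]

def next_match(matches, current):
    """Index of the next match after `current`, wrapping to the first; None if no matches."""
    if not matches:
        return None
    for i in matches:
        if i > current:
            return i
    return matches[0]

def centred_scroll(row, row_height, view_height, total_rows):
    """Scroll offset in pixels that centres `row` in the view, clamped to the content."""
    target = row * row_height + row_height / 2 - view_height / 2
    max_scroll = max(0, total_rows * row_height - view_height)
    return min(max(0, target), max_scroll)

# Hypothetical input labels, for illustration only.
labels = ["gravity x", "gravity y", "length", "Grid size", "color"]
hits = matching_inputs(labels, "gr")   # inputs 0, 1 and 3 match "gr"
cursor = next_match(hits, -1)          # first Tab lands on input 0
```

Clamping the scroll offset keeps the first and last inputs reachable without scrolling past the ends of the actor.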

This should be an easy one. In reference to a few recent posts about control surfaces, there were several mentions of using the HUI protocol from Isadora to send to and receive from desks with motorised faders. Implementing this protocol with actors seems pretty inefficient, and it would be better done in JavaScript. It wouldn't require access to MIDI ports inside the JavaScript actor, but a complex MIDI parser could be built there if there were a Receive Raw MIDI actor that could listen to all raw data on a given port. This would also require a "real" Send Raw MIDI actor (or a new version with a raw code input, not the params/parameter model it uses now). Using such a Send Raw MIDI actor, all MIDI commands could be formatted in JavaScript and sent through it, while Isadora still manages the MIDI ports.
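To illustrate what the parser side would have to do with a raw byte stream, here is a minimal Python sketch of generic MIDI 1.0 parsing: it handles running status, real-time bytes interleaved anywhere, and SysEx. It does not implement HUI itself (its message layout is not covered here), the proposed Receive/Send Raw MIDI actors do not exist yet, and in Isadora this logic would be written in JavaScript; the class and function names are hypothetical.

```python
class MidiParser:
    """Split a raw MIDI 1.0 byte stream into complete messages (tuples of ints)."""

    def __init__(self):
        self.running_status = None  # last channel-voice status byte
        self.status = None          # status of the message being assembled
        self.data = []
        self.sysex = None           # bytes collected inside a SysEx, or None

    @staticmethod
    def _data_length(status):
        """Number of data bytes that follow a non-SysEx status byte."""
        high = status & 0xF0
        if high in (0xC0, 0xD0):    # program change, channel pressure
            return 1
        if high < 0xF0:             # other channel-voice messages
            return 2
        return {0xF1: 1, 0xF2: 2, 0xF3: 1}.get(status, 0)

    def feed(self, data):
        """Consume bytes and return the list of messages they complete."""
        out = []
        for b in data:
            if b >= 0xF8:                       # real-time: emit immediately
                out.append((b,))
                continue
            if self.sysex is not None:
                if b == 0xF7:                   # end of SysEx
                    self.sysex.append(b)
                    out.append(tuple(self.sysex))
                    self.sysex = None
                    continue
                if b < 0x80:
                    self.sysex.append(b)
                    continue
                self.sysex = None               # other status aborts the SysEx
            if b == 0xF0:                       # start of SysEx
                self.sysex = [b]
                self.status, self.data, self.running_status = None, [], None
                continue
            if b >= 0x80:                       # new status byte
                self.status, self.data = b, []
                # system common (F1-F7) cancels running status
                self.running_status = b if b < 0xF0 else None
                if b == 0xF7 or self._data_length(b) == 0:
                    if b != 0xF7:               # ignore a stray end-of-SysEx
                        out.append((b,))
                    self.status = None
                continue
            if self.status is None:             # data byte with no pending status
                if self.running_status is None:
                    continue                    # stray data byte: ignore
                self.status = self.running_status
            self.data.append(b)
            if len(self.data) == self._data_length(self.status):
                out.append((self.status, *self.data))
                self.status, self.data = None, []
        return out

def control_change(channel, controller, value):
    """Build a 3-byte Control Change message (channel 0-15, values 0-127)."""
    if not (0 <= channel <= 15 and 0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("value out of MIDI range")
    return bytes([0xB0 | channel, controller, value])
```

Keeping the parser stateful across `feed` calls matters because a port can deliver a message split over several packets.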

If Isadora patches could be represented in an abstracted text format (I guess some kind of JSON or other common data format) that described the control flow, connections and default values (including code in any of the text-entry actors like Text Parser or JavaScript), it would be very helpful for the future. Isadora patches could then be interpreted by LLMs like ChatGPT, and LLMs could be used to generate patches and suggest solutions. It probably won't happen in a hurry, but it would definitely make Isadora forward-facing. Extra points for adding a language model inside Isadora that we could opt in to share our patches with, and that could eventually act like Copilot.
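As a rough picture of what such a format might contain, here is a minimal Python sketch of a purely hypothetical schema: actors with default input values, and links written as "actorId.port" strings. This is not an existing Isadora export format; the actor types, input names, and port names are illustrative assumptions only. The point is that a plain JSON round trip loses nothing, which is what would make patches readable and writable by other tools.

```python
import json
from dataclasses import dataclass, field, asdict

@dataclass
class Actor:
    id: str
    type: str                                    # actor name as shown in the patch
    inputs: dict = field(default_factory=dict)   # input name -> default value or code text

@dataclass
class Link:
    src: str   # hypothetical "actorId.outputName"
    dst: str   # hypothetical "actorId.inputName"

@dataclass
class Scene:
    name: str
    actors: list = field(default_factory=list)
    links: list = field(default_factory=list)

def to_json(scene):
    """Serialise a scene (including nested actors and links) to indented JSON."""
    return json.dumps(asdict(scene), indent=2)

def from_json(text):
    """Rebuild a Scene from the JSON produced by to_json."""
    d = json.loads(text)
    return Scene(name=d["name"],
                 actors=[Actor(**a) for a in d["actors"]],
                 links=[Link(**l) for l in d["links"]])

# Hypothetical example: script text is stored verbatim as a string input.
scene = Scene("demo",
              [Actor("a1", "Wave Generator", {"frequency": 0.5}),
               Actor("a2", "JavaScript", {"code": "// user script stored as text"})],
              [Link("a1.value", "a2.input 1")])
```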

Hi there,

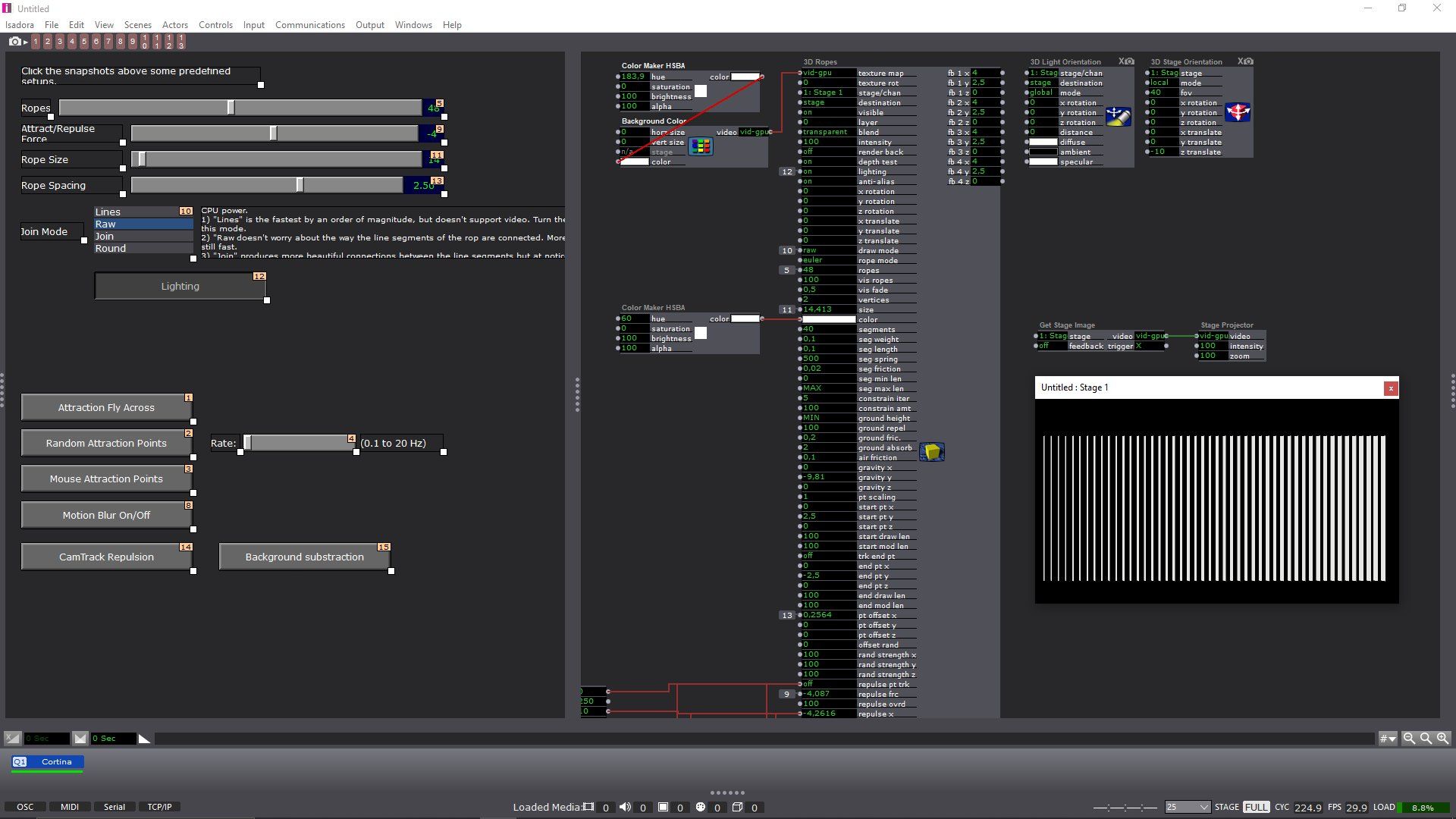

Quite some time ago I was playing around with the patch from Guru Session #7 (if I'm right), and I'm unable to get a "curtain" with a homogeneous rope thickness unless I select the "line" draw mode.

I'm attaching a screenshot for better understanding.

Setting "draw mode" to "line", all ropes are the same thickness, but any other draw mode makes the ropes on the right appear progressively thicker. I guess it is a lighting issue, since the "line" mode does not support lighting. I've played around with the 3D Light and 3D Stage Orientation actors, but have not been able to get an even curtain.

This is nothing urgent, but it has been itching at the back of my head for quite some time, and I finally found the time to ask.

Cheers

Re: [OSC hardware controllers](/topic/8223/osc-hardware-controllers)

I'm looking to get motorised hardware sliders (and knobs) to better control my Izzy patches. I've been using my good little NanoKorg II (thanks @DusX for your user actor).

In my search I came across the somewhat older post (Dec 22) referenced above, and, somewhat surprisingly, there seem to be hardly any OSC hardware controllers out there.

Well, I've found this one (LS Wing), but there is only very limited information about it. It is somewhat expensive (980€), but seems to be a real all-rounder.

https://plsn.com/featured/equi...

https://products.equipson.es/ls-wing

...which is weird, since the post is from 2019. I would have thought that many people had been waiting for something like this and that I would find some user reports, but I can't find anything.

If anyone has more information, it would be great if you could share it. Otherwise, I'm happy to share my findings with you.

Interactive Networked or In-person/Virtual Orchestra performance

I wanted to share these 2 events below. When I started out using Isadora, my goal was to try to make something visual that matched what I was doing sonically. I'm using ZoomISO to move video from Zoom, and Cleanfeed to connect audio from everyone's locations. To sync our playing we use a number of musical methods and approaches: ears and musicianship, basically. I think it's really interesting because it makes a familiar but slightly different piece or experience for each person connected. Since we're playing with the latency and tapping into a broader musical sense, we're able to play as though we're in person in some ways, and also in a way similar to what I believe large marching bands manage. We're planning a lot of shows this coming season; the 2 clips below are from June. We have a good-sized roster of players now and we're looking for more collaborators, so if anyone knows people or places interested in hosting, feel free to send me a message (trevor@enjinnarts.org). Thanks! Trevor

“Cohere Touch”, a Networked Piece and Augmented Reality System for Solo Viola, Virtual Musicians, and Orchestra.

Cohere Touch is the second piece in a series of pieces written for decentralized, simultaneous telematic performance that reveals and plays with the connections we have all around us. “Touch” in the title comes from participants being in one physical space and feeling the other networked locations and music, affecting them with movement and sound, essentially touching the shared canvas physically and sonically. In addition to being a musical piece, the Cohere Touch system is also a specially designed augmented reality system that allows performers and audience to play music together and affect their own in-person space as well as the other networked spaces participating in the piece.

Make Music Day 2023

Through a partnership between EnJinn Arts and The Art Effect Trolley Barn (489 Main St, Poughkeepsie, NY 12601), on June 21st, 2023, you are invited to join participants from all over the world in a FREE, globally connected music-making performance through augmented reality, featuring performers from all over the world and 3 performances of "Cohere Touch", a piece and AR system created by Trevor New.

"Bring your instruments or just yourselves and let's make music that wraps around the globe!"

Hello all,

For those who are interested in the history of sensing devices,

tells the story of David Holz, the founder of Leap, who refused to be bought by Apple twice and later became the founder of Midjourney.

Cheers